Development and testing walk side by side in any organization, though in some scenarios they also work apart. Independent software testing companies are widely used today: they play a vital role, bring deep technological understanding, and are dedicated solely to testing different types of software applications thoroughly. Companies can choose customized software testing services and solutions, which let them step back from testing activities and focus on other business areas.

Many businesses now rely on software applications, and on software development companies that are expected to deliver fully functional, high-performance, and secure software. If these qualities are missing, the business can suffer heavy losses. Before a product is ready for release, the development team needs to take it through several phases and make sure it moves into testing. This helps the team find both minor and major bugs before release and surface pre-development and post-development issues so they can be closed before the product goes to market.

The testing phase is essential for any application. If it is not performed properly, it affects not only the application under development but the complete software development life cycle, and the product may not reach the expected quality. Many companies test their applications only just before release. This can be risky, because testing at the last stage tends to uncover many defects, functional or non-functional, late in the cycle. The main purpose of testing is to make sure software applications work correctly and meet user expectations. Development companies working on multiple projects may struggle to test each one for lack of time. If they test in-house, management may need to maintain multiple testing teams, which can be costly.

These companies may also struggle to find the right testing tool for a project, or to hire testers who know current tools better than their existing testers do. Maintaining both development and testing activities is a major challenge. Independent software testing service providers give software development companies a better option: they can offload testing and get quality service for their money. Some vendors offer only software testing services and can provide specialized testing for different kinds of applications, while others offer broader testing services and solutions that give client companies flexibility. Typically, these vendors maintain a pool of capable testers with deep expertise in the relevant projects, identify the right tools, and meet deadlines. Most independent testing vendors bring best practices, robust test management, effective test execution, monitoring, global delivery, and 24/7 support, which can help a software development company accelerate time to market.

...

Today, customers want the best rather than merely the better in everything, and they want it quickly, along with a seamless digital experience. But how can businesses deliver that?

Let us look at how digital experience and digitization can help us improve our business.

We can thank companies like Apple and Amazon for setting the expectation that every organization should deliver products and services rapidly, with a seamless user experience.

Consumers expect to be able to log in to their electricity account and check their consumption online. They go to a telecommunications dealer, buy a smartphone, and expect it to activate instantly. They even expect banks to approve their personal or mortgage loans instantly. They expect instant service, whether from the private or the public sector.

They even wonder why they need to provide identification and financial statements to the bank when their employer deposits their paychecks on time every month.

Customers demand intuitive interfaces, personalized treatment, persistence and consistency, real-time fulfillment, negligible errors, and 24/7 availability. Consumer behavior is changing rapidly as people look for more convenience and customization.

It is about more than a better customer experience, however: when organizations get it right, they can also offer aggressive pricing thanks to lower overheads and stronger operational controls, with less risk.

Enchant the Customer

To meet customer expectations, organizations must accelerate the digitization of their business. But digitization should be more than automating a current process. Organizations must rethink and reinvent their operations, including faster decision making, fewer required steps, and quicker documentation, while addressing fraud and regulatory issues in parallel.

Operating models, skills, organizational structures, and roles need to be redesigned to match the reinvented processes.

Operating models should be adapted and redesigned to provide better customer insights, performance tracking, and decision-making capabilities. Digitization often requires new skills combined with existing wisdom.

Needless to say, digitizing processes brings huge benefits: companies can cut costs by up to 90 percent and improve turnaround time (TAT) by several orders of magnitude.

For example, one financial institution digitized its mortgage application, assessment, and decision-making processes, which let it cut costs by 70 percent and reduce the time to preliminary approval from several days to just minutes.

A telecom service provider can build a system in which customers activate their new phones without involving the back-office department. Through digitization, insurance companies can automatically adjudicate a large share of their simple claims. Replacing paper and manual processes with software allows organizations to collect the required data automatically, which improves process performance while reducing cost and risk. Digitization also helps managers address issues before they become critical; for example, supply chain quality issues can be identified and resolved by monitoring customers' purchasing activity and feedback.

Many leading organizations are reinventing their processes: challenging everything connected to an existing process and rebuilding it with cutting-edge digital technology.

This is done through a series of steps that combine advanced and traditional methods, such as lean and agile.

Success Factors

Companies in every sector can learn from the practices of organizations that have implemented digitization successfully.

Successful digitization starts with designing the future state of each process while setting aside current constraints, for example by aiming to cut turnaround time from days to minutes. Once the future state has been designed, the constraints are revisited. Organizations should always aim to challenge each constraint.

Regularly reviewing customer experiences not only boosts effectiveness in specific areas but also helps address pressing issues. But this alone is not a complete recipe for a great user experience. To enrich it, the digitization team needs support from every function involved in the user experience.

Tackle the End-To-End Customer Experience

Improving individual steps in isolation, however, will never deliver a truly seamless experience and may leave significant potential on the table. To tackle an end-to-end process such as customer onboarding, process-digitization teams need support from every function involved in the customer experience.

To achieve this, some companies are creating cross-functional, start-up-style units that involve all the stakeholders and users concerned with the end-to-end user experience. Members often work closely together to ensure better communication and a true team effort.

Build Capabilities

Digitization talent is in short supply, so successful programs emphasize building in-house capabilities.

The aim is to build a team of highly skilled staff who can be called on to digitize processes quickly. Organizations should look externally for talent to fill new roles and skill sets, such as user-experience designers and data scientists. The managers selected for the first wave to pilot the transformation must be chosen carefully: they should be well trusted within the organization and ready to commit to it for the long term. It is also essential that the staff can build the necessary technology components in a highly proficient way, so that they can be reused across processes, maximizing economies of scale.

...

Two firms in the big data and analytics space, Tableau (ticker DATA) and Hortonworks, went through a critical moment when their reported results missed forecasts by $0.05 and their stock dropped by five percent. This happens frequently, and nobody seems to have a clear idea of what is going on in the business intelligence (BI) and Hadoop space. Should companies flee the BI and big data space before it collapses completely?

Instead of focusing on sensational headlines, investors and technology leaders should look closely at the missed forecasts, because they hold clues to important trends that can help them grow.

Companies also need to look at their results in the context of the industry as a whole. According to Gartner's analysis of worldwide dollar-valued IT spending, IT spending grew by 0.0 percent in 2016; that is, it was flat. Against this benchmark, growth of 35% stands out strongly, and Hortonworks' total revenue for the past quarter grew by 46% year over year.

In other words, investor expectations are very high and hard to meet. Before drawing an informed conclusion, industry observers and technology buyers should benchmark the performance of both organizations against the rest of the industry.

In a recent report, Teradata said its revenue shrank by 4% year over year. All else being equal, this analysis suggests Hortonworks could generate more revenue than Teradata by 2020.
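The "all else being equal" projection is simple compounding: apply each company's year-over-year growth rate repeatedly until the faster-growing company's revenue overtakes the other's. The sketch below assumes both rates stay constant (46% and -4%, the figures quoted above) and uses hypothetical starting revenues, since the article gives none. It illustrates the arithmetic only and is not a forecast; the answer depends entirely on the inputs.

```python
def years_to_overtake(small: float, small_growth: float,
                      large: float, large_growth: float,
                      max_years: int = 100):
    """Return the number of whole years until `small` exceeds `large`,
    assuming both compound at constant annual rates.
    Returns None if that does not happen within max_years."""
    for year in range(1, max_years + 1):
        small *= 1 + small_growth  # e.g. 0.46 for +46% per year
        large *= 1 + large_growth  # e.g. -0.04 for -4% per year
        if small > large:
            return year
    return None


# Hypothetical starting revenues (not real figures): 100 vs 1,000 units.
print(years_to_overtake(100, 0.46, 1000, -0.04))  # 6
```

With a tenfold starting gap, a company growing 46% a year passes one shrinking 4% a year in the sixth year; a smaller starting gap shortens that time.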

Let's look at some data analysis pitfalls to avoid before you get sucked in.

Confirmation Bias

Suppose you have a hypothesis in mind, but you look only for data patterns that support it and ignore all data points that contradict it. Let us see what happens.

For example, you might pick out the one landing page that performed well and note that its conversion rate is far above what you consider average. You could then use that page as the sole data point to prove your hypothesis, while completely ignoring the possibility that its leads will not qualify or that traffic to the landing page is sub-par.
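To make the cherry-picking trap concrete, here is a minimal sketch with hypothetical landing-page numbers (the page names and counts are invented for illustration). Reporting only the best page's conversion rate tells a very different story from the rate pooled across all pages.

```python
# Hypothetical landing-page results: page -> (conversions, visits).
PAGES = {
    "A": (40, 200),
    "B": (30, 1000),
    "C": (25, 1200),
}


def conversion_rate(conversions: int, visits: int) -> float:
    """Share of visits that converted; 0.0 when there were no visits."""
    return conversions / visits if visits else 0.0


# Cherry-picking: report only the best-performing page.
best_page, (best_conv, best_visits) = max(
    PAGES.items(), key=lambda item: conversion_rate(*item[1])
)
cherry_picked = conversion_rate(best_conv, best_visits)

# The full picture: pool every page before computing the rate.
overall = conversion_rate(
    sum(c for c, _ in PAGES.values()),
    sum(v for _, v in PAGES.values()),
)

print(f"best page {best_page}: {cherry_picked:.1%}")  # best page A: 20.0%
print(f"all pages: {overall:.1%}")                    # all pages: 4.0%
```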

An important rule of thumb: never approach data exploration with a specific conclusion in mind. Most professional data analysis methods are designed so that you test your hypothesis against a null hypothesis and then either reject the null or fail to reject it, rather than setting out to prove your explanation.

Correlation vs. Causation

Confusing correlation with causation is a common mistake. When one action causes another, the two are almost certainly correlated. However, just because two things occur together doesn't mean that one caused the other, even when it seems to make sense.

You might find a strong positive association between high website traffic and high revenue, but that doesn't mean high traffic is the cause of high revenue. There may be an indirect or common cause behind both, one that makes high revenue more likely to occur whenever high website traffic occurs.

For example, in a classic B2B data set you may find a strong association between the number of leads and the number of opportunities, so that periods with a high volume of leads also show a high number of opportunities.

Here are some more things to watch for when doing data analysis:

• Do not compare unrelated data sets or data points and infer relationships or similarities.

• Do not base decisions on analysis of incomplete or "poor" data sets.

• Do not analyze a data set without considering other data points that may be critical to the analysis.

• Do not group data points together and treat them as one. For example, lumping the unique visits and total visits to your website into a single figure inflates the actual number of visitors and distorts the conversion rate.

• Do not ignore simple mistakes and oversights, which can happen at any time.
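The grouping pitfall in the list above is easy to see with a tiny visit log. In this sketch the visitor IDs and conversions are hypothetical: counting every visit as a visitor doubles the apparent audience, and the conversion rate changes depending on which denominator is used, so each metric should be reported separately and labeled.

```python
# Hypothetical visit log: one entry per visit, identified by visitor ID.
VISITS = ["u1", "u2", "u1", "u3", "u1", "u2"]
# Hypothetical set of visitors who converted.
CONVERTED = {"u1", "u3"}

total_visits = len(VISITS)          # every visit, including repeat visits
unique_visitors = len(set(VISITS))  # each visitor counted once

# Keep the two metrics separate and state which denominator each rate uses.
rate_per_visitor = len(CONVERTED) / unique_visitors
rate_per_visit = len(CONVERTED) / total_visits

print(f"visits: {total_visits}, unique visitors: {unique_visitors}")  # visits: 6, unique visitors: 3
print(f"conversion per visitor: {rate_per_visitor:.1%}")             # 66.7%
print(f"conversion per visit: {rate_per_visit:.1%}")                 # 33.3%
```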

...

Digitalization, digital networking, research, network automation, education, and educational networks: are these just the buzzwords of recent technology for educational institutions? Why is everyone talking about digitalization and networks, and how are the two connected? In this blog, we look at what network automation and education networks are.

Whatever smaller research institutions say about education networks and individual campus networks, both share the same aim: supporting education through current network automation strategies and tools. Do these tools and strategies deliver significant efficiencies for smaller institutions, or is the network automation wave more hype than substance for them?

Let us look at how some regional networks have succeeded, and at the challenges involved in deploying network automation with relatively small, outsourced staff and support. We also discuss the experience of working with this diverse technology, how it raised awareness of the potential value of network automation, and how regional networks can help smaller schools along this path.

Digital learning and collaboration have become an essential part of today's education. K-12 schools are adopting digital learning methods. Blended learning and one-to-one computing programs are reshaping classroom study and helping more students become tech-savvy. Higher education institutions are adopting BYOD (bring your own device), allowing students to connect their smartphones, laptops, tablets, and other gadgets. To support this, institutions must meet unprecedented demand for high-performance connectivity and highly reliable campus and data center network solutions.

Challenges:

The rise of new technologies and mobile devices, a growing appetite for applications, and mounting security concerns are placing new burdens on educational networks. To meet these challenges, schools are expanding their networks to meet student and faculty expectations for high-performance, highly reliable, always-on connectivity.

The school network is mission-critical, and downtime is intolerable when lectures, research projects, and assignments depend on it. Educational applications are growing in diversity and richness as learning increasingly relies on interactive curricula, collaboration tools, streaming media, and digital media. The success of the Common Core assessments depends heavily on connectivity. Universities and colleges with poor-quality or patchy network access quickly discover that it hurts their enrollment rates.

Another challenge is the number and variety of Wi-Fi devices students bring to a university or college campus. Students commonly carry three or more devices, such as a smartphone, tablet, laptop, gaming device, or streaming media player, and expect flawless connectivity. Meanwhile, higher education institutions are deploying wireless IP phones for better communication, IP video cameras to improve physical security, and sensors for a more efficient environment. The projections for the Internet of Things, which will connect hundreds of billions of devices in a few short years, are nothing short of staggering.

Students, faculty, and administrators expect ever more connectivity, while the complexity and cost of networking are also growing exponentially. On top of this growth, "adding on" networking equipment to old designs is making networks ever more fragile. IT budgets are tight, and technology needs are growing faster than funding.

What if schools took a step back and seized a unique opportunity to proactively design their networks for the challenges of today and tomorrow? What if deploying the network were simple rather than a manual, time-consuming chore? What if your initial design and build could scale for years to come, without building configurations on the fly every time? What if networks were easier to plan and build, configure and deploy, visualize and monitor, with automated troubleshooting and broad reporting?
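The questions above describe template-driven configuration, one common form of network automation: define the design once and generate each device's configuration from it instead of hand-building configs. The sketch below uses Python's standard `string.Template`; the vendor-neutral config syntax, hostnames, VLAN numbers, and NTP address are all hypothetical, and real devices would need their own configuration dialect plus a tool to push the result.

```python
from string import Template

# Hypothetical, vendor-neutral config syntax; real switches use their own dialect.
SWITCH_TEMPLATE = Template(
    "hostname $hostname\n"
    "vlan $student_vlan name STUDENTS\n"
    "vlan $staff_vlan name STAFF\n"
    "ntp server $ntp_server\n"
)

# Hypothetical inventory; in practice this might come from a spreadsheet or IPAM system.
INVENTORY = [
    {"hostname": "lib-sw01", "student_vlan": 110, "staff_vlan": 210},
    {"hostname": "sci-sw01", "student_vlan": 120, "staff_vlan": 220},
]


def render_configs(inventory, ntp_server="10.0.0.1"):
    """Render one config per switch from the single shared template.
    Template.substitute raises KeyError if any placeholder is missing."""
    return {
        dev["hostname"]: SWITCH_TEMPLATE.substitute(dev, ntp_server=ntp_server)
        for dev in inventory
    }


configs = render_configs(INVENTORY)
print(configs["lib-sw01"])
```

Because every device is generated from the same template, adding a building means adding one inventory row, and a design change is made once in the template rather than on each switch.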

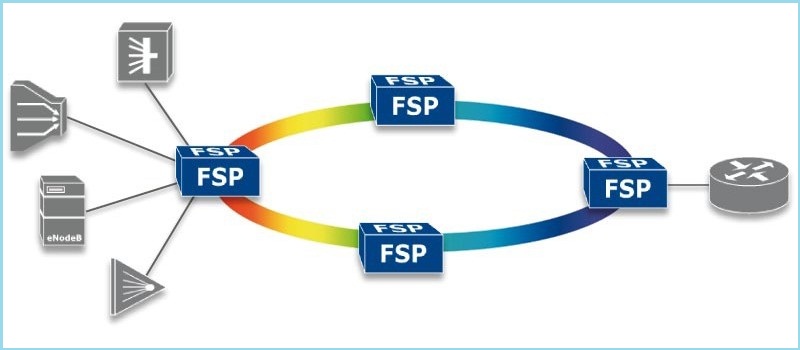

Vendors and tools that serve educational networks include Juniper Networks, HP, CODE42, DLT, EKINOPS, Level 3, cloudyCluster, OSI, and many more. It's time for educational institutions to modernize how they design, build, and maintain their networks, taking advantage of automation and modern management tools to achieve scale, consistency, and efficiency.

...

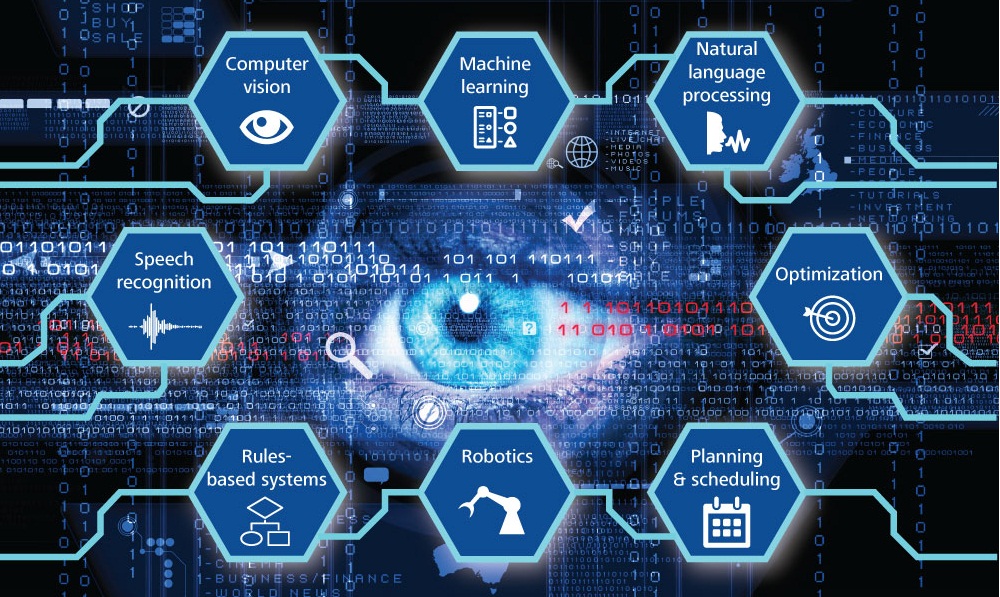

Artificial intelligence has covered a very large area of computing since the early days of the computer, and with cognitive computing models we are getting closer than ever to human-like computing. What are these models, and why are we talking about them today?

Cognitive computing is a mash-up of cognitive science (the study of the human brain and how it functions) and computer science, and its results will have far-reaching impacts on our private lives, healthcare, business, and more.

What is Cognitive Computing?

The aim of cognitive computing is to replicate human thought processes in a computerized model. Broadly speaking, the term describes technology platforms built on self-learning systems that use data mining, natural language processing, and pattern recognition to mimic the way the human brain works. Cognitive computing is growing very fast, with the goal of creating automated IT systems capable of solving problems without human assistance.

Cognitive computing systems use machine learning algorithms that continually acquire knowledge from the data fed into them by mining it for information. These systems refine the way they look for patterns and the way they process data, so they become capable of anticipating new problems and modeling possible solutions.

Cognitive computing is used in numerous artificial intelligence (AI) applications, including expert systems, neural networks, natural language processing, robotics, and virtual reality. While computers have outperformed humans at calculation and data processing for decades, they have not been able to accomplish some tasks that humans take for granted as simple, such as understanding natural language or recognizing unique objects in an image. Cognitive computing represents the third era of computing: from computers that could tabulate sums (1900s), to programmable systems (1950s), and now to cognitive systems.

Cognitive systems, most notably IBM's Watson, rely on deep learning algorithms and neural networks to process information by comparing it to a training set of data. The more data a system is exposed to, the more it learns and the more accurate it becomes over time. This type of neural network can be pictured as a complex "tree" of decisions the computer can make to arrive at an answer.

What can cognitive computing do?

According to a recent TED Talk from IBM, Watson could eventually be applied in healthcare settings, where it could collate the span of knowledge around a condition, including patient history, journal articles, best practices, diagnostic tools, and more. It could then analyze that vast quantity of information and provide recommendations as needed.

The consulting doctor would then examine the evidence, and treatment options would be proposed based on a large number of factors, including the individual patient's presentation and history. Hopefully, this will lead to better treatment decisions.

In other scenarios, the goal is not to replace the doctor but to expand the doctor's capabilities by processing an amount of data no human could retain, and providing a summary of potential applications. This kind of process could be applied in any field, such as finance, law, and education, where large quantities of complex data need to be processed and analyzed to solve problems.

You can also apply these systems in other business areas, such as consumer behavior analysis, personal shopping bots, travel agents, tutors, customer support bots, security, and diagnostics. Personal digital assistants are available today on our phones and computers, such as Siri and Google, among others, but they are not true cognitive systems: they have a pre-programmed set of responses and can respond only to a preset number of requests. As the technology matures, in the near future we will be able to address our phones, computers, cars, and smart houses and get a real, thoughtful response rather than a pre-programmed one.

The future will be exciting, as computers become more human-like and expand our capabilities and knowledge. Get ready for an era in which computers enhance human knowledge and ingenuity in entirely new ways.

...
